We present major highlights since 1979 both in this Introduction and throughout the Tutorial. These include short summaries of major space-based programs: launching several other satellite/sensor systems similar to Landsat; placing radar systems in space; expanding the fleet of weather satellites; launching a series of specialized satellites to monitor the environment using, among others, thermal and passive microwave sensors; developing sophisticated hyperspectral sensors; and deploying a variety of sensors to gather imagery and other data on the planets and other astronomical bodies.

The photographic camera has served as a prime remote sensor for more than 150 years. It captures an image of targets exterior to it by concentrating electromagnetic (EM) radiation (normally visible light) through a lens onto a recording medium (typically silver-based film). The film displays the target objects in their relative positions through variations in brightness, rendered as gray levels (black and white) or color tones. Although the first, rather primitive photographs were taken as "stills" on the ground, the idea of photographing the Earth's surface from above, yielding the so-called aerial photo, emerged in the 1840s with pictures from balloons. By World War I, cameras mounted on airplanes provided aerial views of fairly large surface areas that proved invaluable for military reconnaissance. From then until the early 1960s, the aerial photograph remained the single standard tool for depicting the surface from a vertical or oblique perspective.

Remote sensing above the atmosphere originated early in the space age (in both the Russian and American programs). Beginning in 1946, some V-2 rockets acquired from Germany after World War II were launched by the U.S. Army from White Sands, New Mexico, to high altitudes. These were referred to as the Viking program (a name used again for the Mars landers). These rockets, while not attaining orbit, carried automated still or movie cameras that took pictures as the vehicle ascended. Here is an example of a typical oblique picture, looking across Arizona and the Gulf of California to the curving Earth horizon (this is shown again in Section 12). (Note: the writer [NMS], as an Army corporal stationed at Fort Bliss in El Paso, TX, was assigned as a Post newspaper reporter and was privileged in spring 1947 to attend a V-2 launch at White Sands and to interview Wernher von Braun, the father of the German V-2 program; little did I know then that I would be heavily involved in America's space program during my career.)

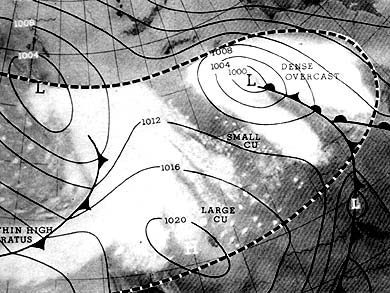

The first non-photo camera sensors mounted on unmanned spacecraft were aboard satellites devoted mainly to looking at clouds. The first U.S. meteorological satellite, TIROS-1, launched by a Thor-Able rocket into orbit on April 1, 1960, looked similar to this later TIROS vehicle. TIROS, for Television Infrared Observation Satellite, used vidicon cameras to scan wide areas at a time. The image below is one of the first (May 9, 1960) returned by TIROS-1 (10 satellites in this series were flown, followed by the TOS and ITOS spacecraft, along with Nimbus, NOAA, GOES and others [see Section 14]). Superimposed on the cloud patterns is a generalized weather map for the region. Then, in the 1960s, as humans entered space, cosmonauts and astronauts in space capsules took photos out the window. In time, the space photographers had specific targets and a schedule, although they also had some freedom to snap pictures at targets of opportunity.